What it is and how it works.

Log shipping is the process of transferring transaction log backups from one server to another, that is, shipping them. It is used as a form of disaster recovery for a database. There is no automated failover: switching to the secondary database involves human intervention.

Two servers are involved: the primary server and the secondary server. The primary server holds the database that is being protected. The secondary server holds the standby copy of the database.

The database to be protected needs to use the Full or Bulk-Logged recovery model. Under the Simple recovery model the transaction log is truncated automatically, so there would be no log backups to ship. A full backup is then taken and restored on the secondary server using the No Recovery option, which leaves the database in a restoring state. Now log shipping is set up: transaction log backups are copied from the primary server to the secondary server and then applied to the restoring database. This has the effect of keeping the secondary database in step with the primary version.

As an extra step, login accounts need to be transferred to the secondary server. That way, if the database ever has to be brought online and used, access will be the same as it is on the primary server.

How to set up Log Shipping

In this step-by-step guide I will set up log shipping on the DataImports database from serverA to serverB.

Create a directory on the primary server

On the primary server create a directory for the transaction log backups. The backups will be made into this directory and then copied across to a receiving directory on the secondary server.

I create this under the main maintenance or SQL backup directory.

If there is not one there already, create a folder called LS. Then create a sub-folder with the name of your database: \\serverA\m$\Backups\LS\dataImports

Create a directory on the secondary server

On the secondary server create a shared directory for receiving the log shipped files.

Again, I create this under the main maintenance or SQL backup directory.

If there is not one there already, create a folder called LS. Then create a sub-folder with the name of your database: \\serverB\f$\Maint\LS\dataImports
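If you would rather script the folder creation than do it by hand, the same LS sub-folder layout can be sketched in Python. This is only an illustration: the `ensure_ls_folder` helper is a hypothetical name, and the demo runs against a temporary directory rather than the real backup roots on serverA or serverB. Sharing the folder on the secondary server is not covered here.

```python
import tempfile
from pathlib import Path


def ensure_ls_folder(backup_root: Path, database: str) -> Path:
    """Create <backup_root>/LS/<database> if it is missing and return its path."""
    folder = backup_root / "LS" / database
    # parents=True creates the LS folder when it is not there already;
    # exist_ok=True makes the call safe to repeat.
    folder.mkdir(parents=True, exist_ok=True)
    return folder


if __name__ == "__main__":
    # A temporary directory stands in for the real backup root (assumption).
    with tempfile.TemporaryDirectory() as root:
        created = ensure_ls_folder(Path(root), "dataImports")
        print(created.relative_to(root))
```

Running the helper a second time against the same root is harmless, which matches the "if there is not one there already" wording above.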

Exclude the database from TLog backups

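If you have any maintenance plans which carry out transaction log backups for this database, you will need to exclude it from them. Log backups taken outside log shipping would never reach the secondary server and would break the chain of logs being restored there.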

Set recovery model of the database to Full

If the recovery model of the database is Simple, set it to Full. You can leave it as it is if it is set to Bulk-Logged.
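The same check and change can be done with T-SQL instead of the SSMS dialog. The sketch below uses Python only to build the two statements as strings; the helper names are hypothetical, and you would run the resulting text in a query window or through whatever client you normally use.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Wrap a value as a Unicode string literal, doubling single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def check_recovery_model_sql(database: str) -> str:
    """Statement that reports the current recovery model of one database."""
    return (
        "SELECT name, recovery_model_desc FROM sys.databases "
        f"WHERE name = {quote_literal(database)};"
    )


def set_recovery_full_sql(database: str) -> str:
    """Statement that switches the database to the Full recovery model."""
    return f"ALTER DATABASE {quote_name(database)} SET RECOVERY FULL;"


if __name__ == "__main__":
    print(check_recovery_model_sql("DataImports"))
    print(set_recovery_full_sql("DataImports"))
```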

Backup the database

Take a full backup of the database into the folder you created on the primary server and copy it to the new folder on the secondary server.
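If you prefer a script to the backup dialog, the full backup is a single BACKUP DATABASE statement. The sketch below builds it as a string in Python. The helper name, the M: drive path, and the file name `DataImports_full.bak` are assumptions for illustration; WITH INIT is my choice here so that a re-run overwrites the file rather than appending to it.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Wrap a value as a Unicode string literal, doubling single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def full_backup_sql(database: str, backup_file: str) -> str:
    """Statement that takes a full backup of the database to one disk file."""
    return (
        f"BACKUP DATABASE {quote_name(database)} "
        f"TO DISK = {quote_literal(backup_file)} WITH INIT;"
    )


if __name__ == "__main__":
    # Hypothetical local path to the LS folder created on the primary server.
    print(full_backup_sql("DataImports", r"M:\Backups\LS\dataImports\DataImports_full.bak"))
```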

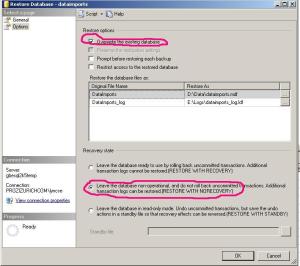

Create a database on the secondary server and then restore the backup

Create a new database with the same name as the one you are going to log ship.

Now restore the database using the backup you have just taken. Make sure you set the file paths to restore over the files you have just created, rather than using the paths stored in the backup.

You must select the option to restore with no recovery.

The database will now have a green arrow next to its name and show as (Restoring...).
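For reference, the restore described in this step corresponds to a RESTORE DATABASE statement with the MOVE, REPLACE and NORECOVERY options. The sketch below builds that statement as a string in Python. The helper name, the logical file names and every path in the example are assumptions; check the real logical names in your own backup before using anything like this.

```python
from typing import Mapping


def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Wrap a value as a Unicode string literal, doubling single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def restore_norecovery_sql(database: str, backup_file: str, moves: Mapping[str, str]) -> str:
    """Statement that restores over an existing database and leaves it restoring.

    `moves` maps each logical file name in the backup to the physical
    path it should be restored to on the secondary server.
    """
    options = [
        f"MOVE {quote_literal(logical)} TO {quote_literal(physical)}"
        for logical, physical in moves.items()
    ]
    # REPLACE overwrites the database created earlier in this step;
    # NORECOVERY keeps it ready to accept transaction log restores.
    options += ["REPLACE", "NORECOVERY"]
    return (
        f"RESTORE DATABASE {quote_name(database)} "
        f"FROM DISK = {quote_literal(backup_file)} "
        f"WITH {', '.join(options)};"
    )


if __name__ == "__main__":
    # Logical names and target paths below are hypothetical.
    print(restore_norecovery_sql(
        "DataImports",
        r"F:\Maint\LS\dataImports\DataImports_full.bak",
        {
            "DataImports": r"F:\Data\DataImports.mdf",
            "DataImports_log": r"F:\Data\DataImports_log.ldf",
        },
    ))
```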

You can also delete the backup file you used for the restore.

Configure Log Shipping

In SSMS right-click on the database name and select Properties.

Click on Transaction Log Shipping. Now tick the box which says “Enable this as a primary database in a log shipping configuration”.

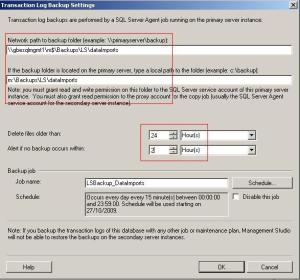

The button ‘Backup Settings’ will now be enabled. Click this and fill out the options.

You should enter the full network path to the folder on the primary server. Underneath this you should also enter the local path. In my example the dataImports data rarely changes, so I have set different values from the defaults: I am deleting files older than 24 hours (72 is the default) and alerting if no backup occurs within 2 hours (1 is the default). Next click on the Schedule button. As the database I am using does not change very often, I have scheduled my backups to take place once an hour. The default is every 15 minutes.

You are now on the configuration screen again. There is a white box labelled secondary server instances and databases. Underneath the box, click Add. Click on the Connect button at the top right and enter the secondary server details. The database should be selected by default.

Now that you are back on the secondary database settings, make sure the option ‘No, the secondary database is initialised’ has been selected. Now click the Copy Files tab. On this screen you need to enter the path for the directory on the secondary server: \\serverB\f$\Maint\LS\dataImports

I change the delete copied files option to 24 hours again. Now click Schedule. I change this to run every hour again (the default is 15 minutes). This time I will also change the start time to 00:15. There is no point in trying to copy the files on the hour, as the backup itself is being taken on the hour. Click OK and that task will be scheduled.

Now click on the Restore Transaction Log tab. I change the delay option to 5 minutes and the alert option to 2 hours. This is because I have a slow rate of change and so only copy every hour. Click on Schedule again. This time I will set the task to run hourly at 30 minutes past the hour. Click OK, and then on the next screen click OK again.
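To see what these staggered schedules mean in practice, here is a small Python sketch that works out when a change made on the primary would be restored on the secondary. It assumes the hourly schedule used in this guide (backup on the hour, copy at 15 minutes past, restore at 30 minutes past), treats every job as instantaneous, and ignores the 5 minute restore delay. The function names and the example date are made up for illustration.

```python
from datetime import datetime, timedelta

# Minute of each hour at which the three jobs start (values from this guide).
BACKUP_MINUTE = 0
COPY_MINUTE = 15
RESTORE_MINUTE = 30


def next_run(after: datetime, minute: int) -> datetime:
    """Return the first hourly run at `minute` that is strictly after `after`."""
    candidate = after.replace(minute=minute, second=0, microsecond=0)
    if candidate <= after:
        candidate += timedelta(hours=1)
    return candidate


def restored_at(committed: datetime) -> datetime:
    """Time at which a change committed on the primary reaches the secondary."""
    backed_up = next_run(committed, BACKUP_MINUTE)
    copied = next_run(backed_up, COPY_MINUTE)
    return next_run(copied, RESTORE_MINUTE)


if __name__ == "__main__":
    # A change made one second after the 10:00 backup waits for the 11:00 backup.
    committed = datetime(2024, 1, 1, 10, 0, 1)
    print(restored_at(committed) - committed)
```

Under these assumptions the secondary is at most about an hour and a half behind the primary, which fits inside the 2 hour alert threshold chosen above.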

You are now on the properties page again.

Click OK again and the server will create the log shipping jobs. Once the two items have gone green you can click Close.

Run the Log Shipping Jobs.

On the primary server there will be a job named like LSBackup_DatabaseName.

Run the LSBackup_DatabaseName job and check that a trn backup file has appeared in the folder you created on the primary server.

On the secondary server look for a job like LSCopy_PrimaryServerName_DatabaseName and run this job. You should see the trn file appear in the folder you created on serverB. Now run LSRestore_PrimaryServerName_DatabaseName.
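The jobs can also be started from a query window with the msdb stored procedure sp_start_job rather than from the SQL Server Agent tree. The Python sketch below builds the expected job names from the naming pattern described above and the matching EXEC statements. The helper names are hypothetical, and the actual job names on your servers may differ, so check them in SQL Server Agent first.

```python
from typing import Dict


def quote_literal(value: str) -> str:
    """Wrap a value as a Unicode string literal, doubling single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def log_shipping_job_names(primary_server: str, database: str) -> Dict[str, str]:
    """Job names following the LSBackup / LSCopy / LSRestore pattern."""
    return {
        "backup": f"LSBackup_{database}",                     # runs on the primary
        "copy": f"LSCopy_{primary_server}_{database}",        # runs on the secondary
        "restore": f"LSRestore_{primary_server}_{database}",  # runs on the secondary
    }


def start_job_sql(job_name: str) -> str:
    """Statement that asks SQL Server Agent to start one job."""
    return f"EXEC msdb.dbo.sp_start_job @job_name = {quote_literal(job_name)};"


if __name__ == "__main__":
    jobs = log_shipping_job_names("serverA", "DataImports")
    # Order matters: back up on the primary, then copy and restore on the secondary.
    for step in ("backup", "copy", "restore"):
        print(start_job_sql(jobs[step]))
```

Note that sp_start_job only requests the start and returns straight away, so wait for each job to finish, and check that the trn file has arrived, before starting the next one.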

Finally Transfer Logins

You should use SSIS to create a Transfer Logins task to copy the logins from serverA to serverB. This way, if you have to fail the database over to serverB, you can enable the logins and won’t have any permissions problems.